This blog post is part of a series. To learn how to set up the cloud-in-a-box system initially, read the first part: Let’s put the cloud in a box.

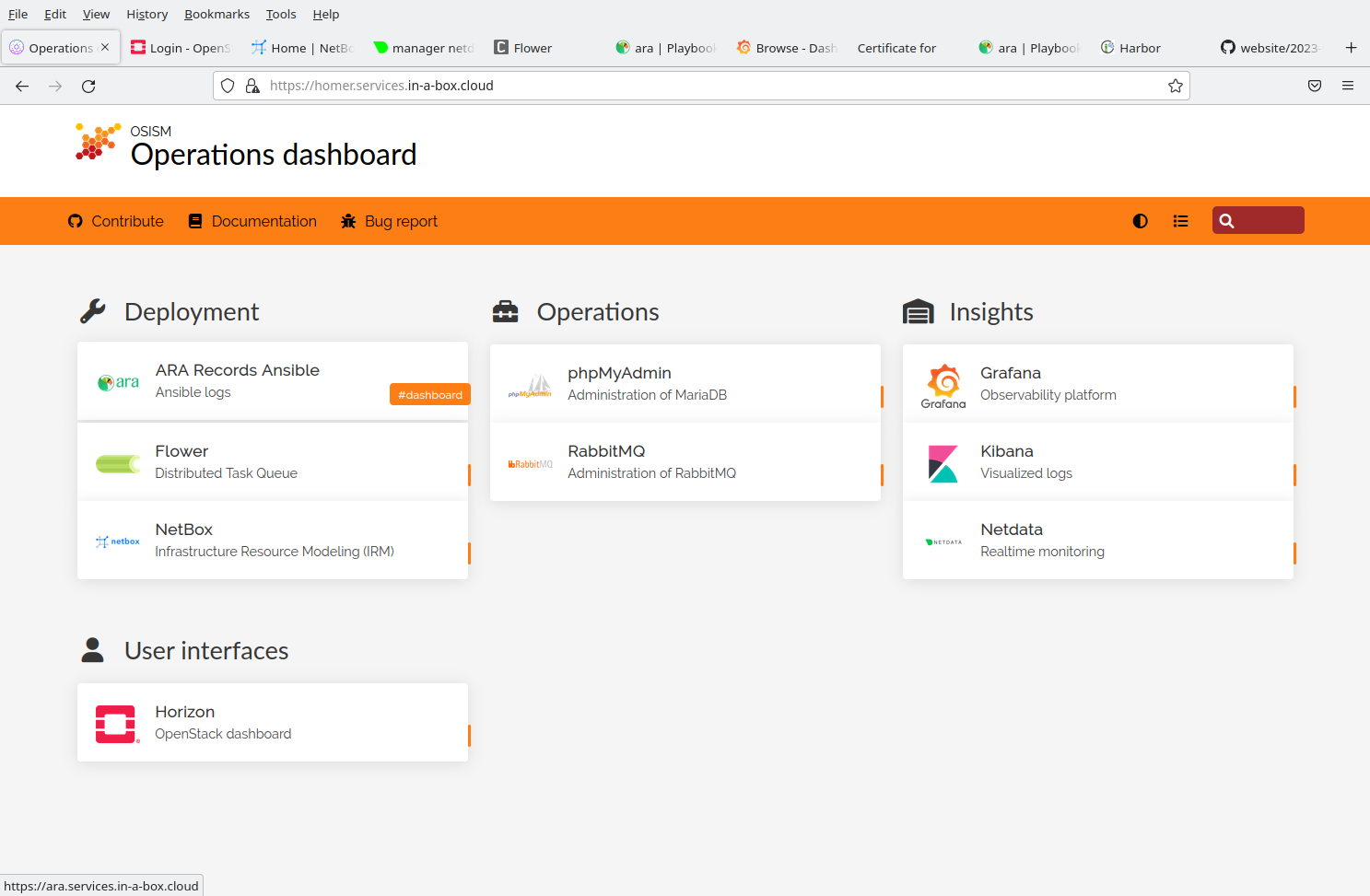

There are a number of web interfaces available for exploring your cloud; they are listed in the cloud-in-a-box documentation in the OSISM repository on GitHub. If you just want to bookmark one page, use the Homer dashboard, which links to all the other web interfaces.

The cloud comes preconfigured with a test project, the smaller half of the standard SCS flavors, a CirrOS image and an Ubuntu image. It also has a public network that lets your VMs talk to the network your CiaB host is connected to (and to the internet, if the CiaB host allows outgoing connections) and from which you can allocate floating IPs. The OpenStack core services are deployed, as well as Octavia and Designate.

The documentation describes the easiest way to use the command line interface:

When you connect to the Cloud-in-a-Box manager system via SSH, you can use the container there with the installed OpenStack CLI tools; the configuration is already done for you. Just export OS_CLOUD=test or export OS_CLOUD=admin to access the OpenStack API with normal user or admin privileges respectively via the command line interface tool openstack.

You can also do it from the WireGuard-connected client directly. Install the python3-openstackclient client tools there (from your distribution or via pip) and configure the cloud in your ~/.config/openstack/clouds.yaml and secure.yaml. You can do this by copying over clouds.yml and secure.yml from /opt/configuration/environments/openstack/ on the CiaB manager, along with /etc/ssl/certs/ca-certificates.crt to avoid SSL errors. You can move the latter into a directory that you have write access to and adjust the references in clouds.yaml. You may drop the octavia and system entries from clouds.yaml and secure.yaml, and you might also rename the two remaining entries to ciab-admin and ciab-test to avoid naming conflicts with other entries that you may already have in your collection of clouds.
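The copy step could be sketched as a small shell helper like the one below. This is only an illustration: the function name `fetch_ciab_config`, the staging directory `./ciab-config` and the SSH target `user@ciab-manager` are made up for this example, and it assumes that your SSH user on the manager is allowed to read the files.

```shell
# Copy the CiaB client configuration into a local staging directory.
# $1: SSH target of the CiaB manager, e.g. user@ciab-manager (hypothetical)
# $2: staging directory (optional, defaults to ./ciab-config, an arbitrary choice)
fetch_ciab_config() {
    manager="$1"
    dest="${2:-./ciab-config}"
    src=/opt/configuration/environments/openstack
    mkdir -p "$dest" || return 1
    # Cloud definitions and credentials, then the CA bundle under a new name
    scp "$manager:$src/clouds.yml" "$manager:$src/secure.yml" "$dest/" &&
        scp "$manager:/etc/ssl/certs/ca-certificates.crt" "$dest/ciab-certificates.crt"
}

# Usage (not executed here):
#   fetch_ciab_config user@ciab-manager
```

Copying into a staging directory rather than straight into ~/.config/openstack/ avoids overwriting a clouds.yaml you may already have; the entries can then be merged and renamed by hand.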

Here is what it looks like on my system:

clouds.yaml:

    clouds:
      ciab-admin:
        auth:
          project_name: admin
          auth_url: https://api.in-a-box.cloud:5000/v3
          project_domain_name: default
          user_domain_name: default
        cacert: ~/.config/openstack/ciab-certificates.crt
        identity_api_version: 3
      ciab-test:
        auth:
          project_name: test
          auth_url: https://api.in-a-box.cloud:5000/v3
          project_domain_name: default
          user_domain_name: default
        cacert: ~/.config/openstack/ciab-certificates.crt
        identity_api_version: 3

secure.yaml:

    clouds:
      ciab-admin:
        auth:
          username: "admin"
          password: "password"
      ciab-test:
        auth:
          username: "test"
          password: "test"

The file ciab-certificates.crt sits in the same directory; it is a copy of /etc/ssl/certs/ca-certificates.crt from the CiaB manager host.
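To check from the client that both entries authenticate, a loop along these lines may help. It is a sketch that assumes the entry names ciab-test and ciab-admin used above and an installed `openstack` client; `token issue` is a standard subcommand picked here simply because it exercises authentication and the CA certificate.

```shell
for cloud in ciab-test ciab-admin; do
    # Skip gracefully where the client tools are not installed
    if ! command -v openstack >/dev/null 2>&1; then
        echo "openstack client not installed, skipping $cloud"
        continue
    fi
    # Request a token and print only its expiry time
    if openstack --os-cloud "$cloud" token issue -f value -c expires; then
        echo "$cloud: authentication works"
    else
        echo "$cloud: authentication failed (check password and cacert path)"
    fi
done
```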

You can of course also use Terraform and other Infrastructure-as-Code tools with these credentials.

With the test user, you have a situation similar to the one you had when you signed up with an SCS IaaS cloud provider. So you can look at the Horizon dashboard and create resources there. The same is of course possible via API calls, using the openstack client command line tooling, the Python SDK or IaC tools. One difference, though, is that your cloud is a lot smaller, so you will run out of resources (typically RAM and disk) if you start too many large VMs.
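For a rough feeling of how quickly that happens, the arithmetic can be sketched in shell. The helpers below are hypothetical and simplified: they assume the plain flavor naming scheme SCS-<vCPUs><type>:<RAM in GiB>[:<disk in GB>] with whole numbers, ignore hypervisor overhead and overcommit, and the free capacity of 24 GiB RAM and 100 GB disk is a made-up figure, not a property of any real CiaB host.

```shell
# Split a plain SCS flavor name into "vCPUs RAM_GiB disk_GB".
parse_scs_flavor() {
    rest="${1#SCS-}"                 # "SCS-1V:2:5" -> "1V:2:5"
    cpu="${rest%%:*}"                # "1V"
    rest="${rest#*:}"                # "2:5"
    ram="${rest%%:*}"                # "2"
    case "$rest" in
        *:*) disk="${rest#*:}" ;;    # "5"
        *)   disk=0 ;;               # no root disk in the name
    esac
    echo "${cpu%[A-Za-z]} $ram $disk"
}

# Upper bound on the number of VMs of one flavor that fit into the
# given free RAM (GiB) and free disk (GB).
max_vms() {
    flavor="$1"; free_ram_gib="$2"; free_disk_gb="$3"
    set -- $(parse_scs_flavor "$flavor")     # now $1=vCPUs $2=RAM $3=disk
    by_ram=$(( free_ram_gib / $2 ))
    if [ "$3" -gt 0 ]; then by_disk=$(( free_disk_gb / $3 )); else by_disk=$by_ram; fi
    if [ "$by_ram" -lt "$by_disk" ]; then echo "$by_ram"; else echo "$by_disk"; fi
}

max_vms "SCS-1V:2:5" 24 100    # prints 12: RAM is the limiting resource here
```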

It is thus a really useful demo system. Be aware however that with preconfigured documented passwords, this system is easy prey for any hacker when connected to the internet.

You can start the openstack-health-monitor to monitor performance and availability of your system. If you use the optimization -O to make it talk directly to the service endpoints with the issued token, you need to set export OS_EXTRA_PARAMS="--os-cacert ~/.config/openstack/ciab-certificates.crt", as these direct calls don’t use the settings from the clouds.yaml file. Note that in my CiaB setup, no amphora image was available, so testing the load balancer with the standard amphora provider was not possible. You can test the OVN provider using -LP ovn, but expect errors due to https://bugs.launchpad.net/neutron/+bug/1956035.

Here’s an example run:

    garloff@xps13kurt(//):/casa/src/SCS/openstack-health-monitor [0]$ export OS_CLOUD=ciab-test
    garloff@xps13kurt(ciab-test):/casa/src/SCS/openstack-health-monitor [0]$ export IMG="openSUSE 15.4"
    garloff@xps13kurt(ciab-test):/casa/src/SCS/openstack-health-monitor [0]$ export JHIMG="openSUSE 15.4"
    garloff@xps13kurt(ciab-test):/casa/src/SCS/openstack-health-monitor [0]$ export FLAVOR="SCS-1V:2:5"
    garloff@xps13kurt(ciab-test):/casa/src/SCS/openstack-health-monitor [0]$ export JHFLAVOR="SCS-1L:1:5"
    garloff@xps13kurt(ciab-test):/casa/src/SCS/openstack-health-monitor [0]$ export OS_EXTRA_PARAMS="--os-cacert ~/.config/openstack/ciab-certificates.crt"
    garloff@xps13kurt(ciab-test):/casa/src/SovereignCloudStack/openstack-health-monitor [1]$ ./api_monitor.sh -O -C -D -N 2 -n 6 -s -LP ovn -b -B -T -i 2
    Running api_monitor.sh v1.88 on host xps13kurt.garloff.de
    Using APIMonitor_1678961257_ prefix for resrcs on ciab-test (nova)
    Send alarms to
    Send notes to
    *** Start deployment 1/2 for 1 SNAT JumpHosts + 6 VMs *** (ciab-test) --tag APIMonitor_1678961257
    [...]

This runs two iterations, each creating 6 VMs plus 1 jump host in 2+1 networks, booting directly from the images, running benchmarks, talking directly to the service endpoints (to save keystone roundtrips) and testing the OVN load balancer. Again, expect errors for the OVN LB connections (after some backend members have been killed). If the health monitor fails to connect to your jump host, your neutron-metadata-agent has failed. (This happened occasionally on some older versions of Cloud-in-a-Box.)

This was with my favorite openSUSE images, which are available from this OTC bucket.

You can also run the SCS compliance checker and it will correctly report that you are lacking some of the mandatory SCS flavors.

In addition, you have OpenStack admin access. So you can do things like creating flavors, registering public images, or looking at the hypervisor(s).
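As a sketch of what such admin operations could look like on the command line, assuming the ciab-admin entry from the clouds.yaml above: the subcommands are standard OpenStack CLI, while the flavor name and sizes, the image file ./my-image.qcow2 and the image name are purely illustrative.

```shell
# Use the entry with admin rights
export OS_CLOUD=ciab-admin

# Only call the CLI where it is actually installed
if command -v openstack >/dev/null 2>&1; then
    # Look at the hypervisor(s) and their state
    openstack hypervisor list

    # Create a flavor (RAM in MiB, disk in GB); name and sizes are examples
    openstack flavor create --public --vcpus 4 --ram 16384 --disk 0 "SCS-4V:16"

    # Register a public image from a local file (hypothetical file and name)
    openstack image create --public --disk-format qcow2 --container-format bare \
        --file ./my-image.qcow2 "my-image"
fi
```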

On the management host, you can maintain the system. You can analyze log files or update the kolla containers.

You can also access the Docker containers to test changes. Obviously, if you do this, you have created a state that is no longer clean from a manager perspective; in the best case, the next container refresh will undo your custom changes. In the worst case, you’ll manage to cause serious breakage.

Here are some things we have used the Cloud-in-a-Box system for:

Getting the Kubernetes Cluster Management to work with our k8s-cluster-api-provider reference implementation is a bit complicated, as the current logic to set up the capi management server does not propagate the custom CA. The custom CA then also needs to be pushed to the OpenStack cluster API provider (capo) and to the OpenStack Cloud Controller Manager (occm). While this can be done manually, it involves quite a few steps. We are working to handle this, but this will only happen after R4 is out. We plan to publish a third blog article then.

For now, we would advise anyone setting up SCS environments to avoid custom CAs and instead use properly signed TLS certificates, for example via Let’s Encrypt.
